About me

I live with my wife in Martigny (Valais, Switzerland) and work as a postdoctoral researcher at the Idiap Research Institute.

I grew up in the Chablais valaisans, close to where I now live and work, and studied at EPFL, where I obtained a Master's degree (MSc) in Microengineering (2016) with a specialization in "Robotics and Autonomous Systems" and later a PhD in machine learning (EDEE, 2021).

In my free time, I practice judo at the Judo Club Saint-Maurice, of which I am the technical director. I have studied this martial art for 25 years and have reached the 3rd Dan.

I train once or twice a week, teach children, and assist them during tournaments.

I also like to play video games and tabletop role-playing games with my friends.

Current Research

I am currently working at Idiap as a postdoctoral researcher in the Perception and Activity Understanding group. I mainly work on research projects, but I also serve as a teaching assistant for the Master in AI from UniDistance and take part in dissemination activities (see the "Talk and Media" section).

I am interested in machine learning, data analysis, and their applications in general.

I also have a special interest in the following topics:

- human perception and its application to activity understanding and human-computer/robot applications;

- interpretable and explainable models;

- deployment of machine learning models.

Past Activities

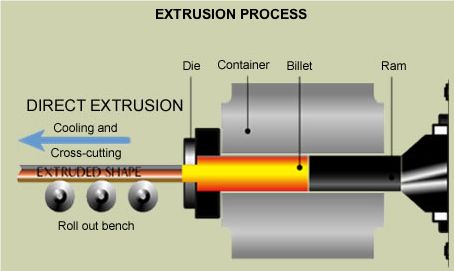

2021-2023 I worked on the P3 project ("Innosuisse" funding, SNSF) with the HES-SO and a company that wanted to improve its manufacturability studies for newly ordered parts as well as the optimization of process parameters. The outcome is a web interface that provides a tool to browse previously produced parts by visually comparing their drawings, and a model that predicts important processing variables from the process parameters. One interesting aspect of this last task was the search for deep learning models that learn (or are forced) to satisfy constraints derived from physical laws.

Project review in brief (Idiap website)

2021-2022 As part of the NATAI project ("Agora" funding, SNSF), I collaborated with the Musée de la Main (CHUV, Lausanne) and provided them with a live gaze tracking demo for their exhibition on artificial intelligence. The result is an interactive gaze-driven soundscape, i.e. an autonomous demonstrator that displays a scene, estimates what the user is looking at, and adapts the audio accordingly.

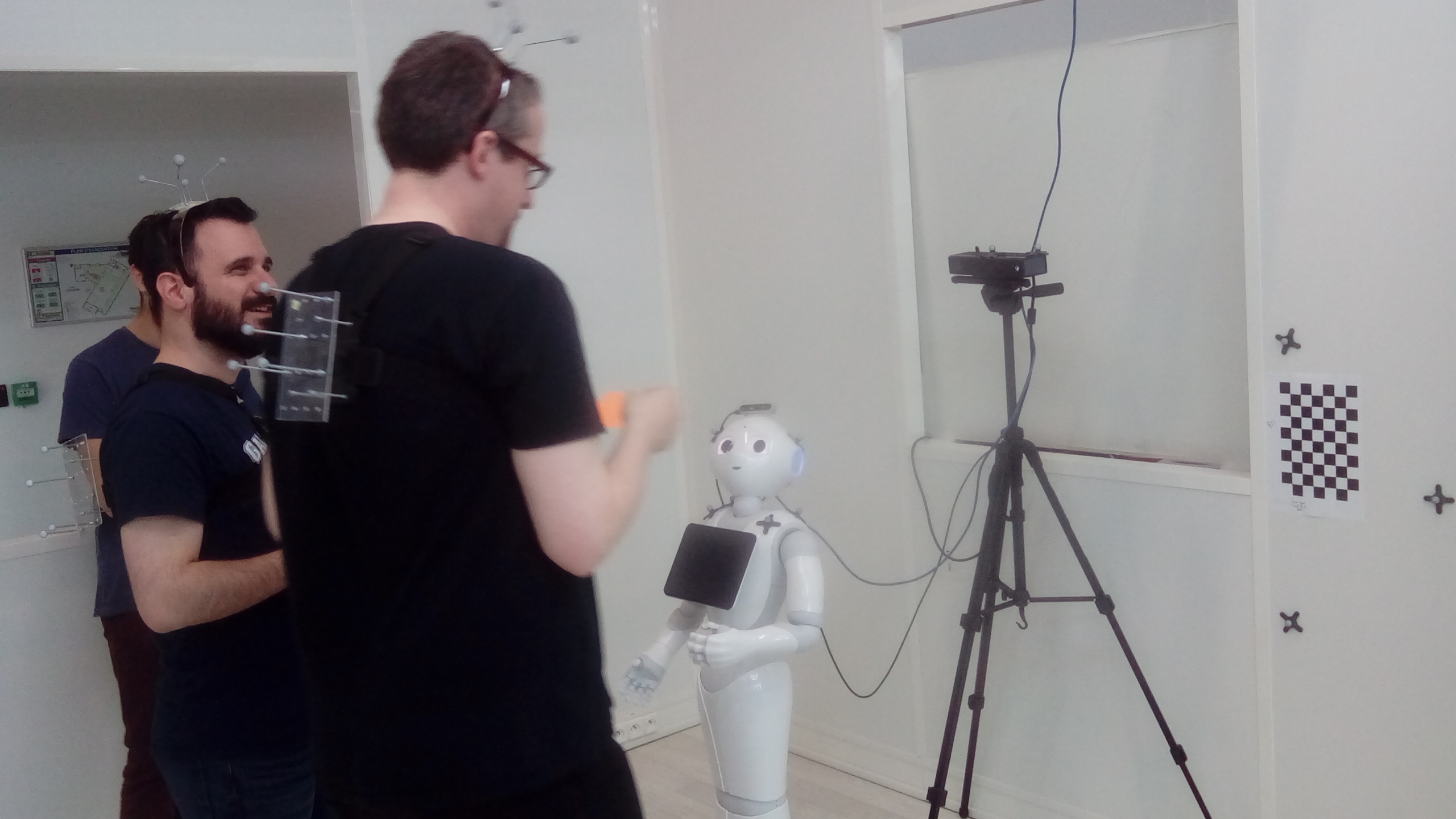

2018-2021 The main funding for my thesis came from the MuMMER project ("Horizon2020" funding, EU), where I worked on modeling and inferring attention in human-robot interactions. Exploiting color and depth images as well as audio data, my goal was to estimate the individual attention of each person in a group interacting with a robot, in order to better understand conversation dynamics. I explored different topics and tasks, such as unsupervised gaze estimation calibration, eye movement recognition, and attention estimation in arbitrary settings.

MuMMER project - Results in brief (EU, CORDIS website)

Paper presenting the latest version of the robot system developed during the MuMMER project (arXiv paper)

Demo of the Idiap perception module in the MuMMER project (YouTube video)

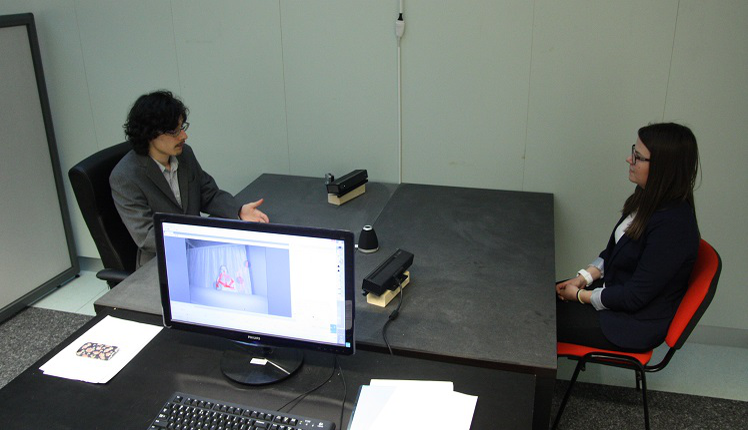

2017 During my PhD studies, I participated in the UBImpressed project ("Synergia" funding, SNSF), whose goal was to study the role of non-verbal behaviours in the formation of first impressions and to improve the training of employees in hospitality. In this context, I mainly worked on gaze estimation and calibration.

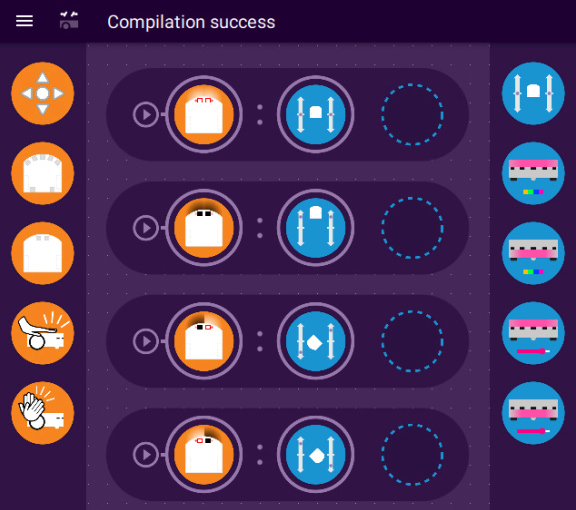

2016 I did my Master's project at MOBOTS (EPFL) under the supervision of Francesco Mondada, in the field of learning analytics with mobile robots. I worked on methods that use the logs recorded during a robot programming lecture to provide useful information to teachers and students, in order to improve the learning outcomes of lectures. I was then hired for 6 more months to continue this work and develop a tool that, based on the results of my Master's project, provides online hints to students learning robot programming.

2015 I worked for 7 months at senseFly (in Cheseaux-sur-Lausanne) on the motor control of their quadrotor and the development of a new camera interface (hardware) for a fixed-wing drone.

2014-2015 During my studies, I carried out two semester projects: one on the implementation of safety behaviours for quadrotor formations (at DISAL, EPFL) and a second on the design of legs for a quadruped robot (at BIOROB, EPFL).

Talk and Media

24.04.2023 - Communication on P3 project results

04-06.04.2023 - Presentation of AI research to high school students as part of the "semaine spéciale pour les gymnasiens" organised by the Musée de la Main.

01.04.2022 to 31.03.2023 - Live gaze tracking demo for an exhibition on artificial intelligence at the Musée de la Main (CHUV, Lausanne) (article from 24heures, RTS news).

10.05.2022 - Talk entitled "Thinking without brain: the robotic logic" organised by Sciences Valais in Sion, as part of the international Pint of Science Festival.

02.12.2021 - Public thesis defense - EPFL announcement.

11.09.2021 - Demo of a "gaze controlled soundscape" for the Idiap 30th anniversary, showing how a user can interact with a computer using their gaze.

20.06.2021 - Talk at CVPR'21 (GAZE2021 workshop) to present our paper "Visual Focus of Attention Estimation in 3D Scene with an Arbitrary Number of Targets".

26.06.2019 - Poster presentation at ETRA'19 to present our paper "A Deep Learning Approach for Robust Head Pose Independent Eye Movements Recognition from Videos".

29.03.2019 - "Portrait de chercheur" (YouTube video in French) made by Sciences Valais.

21.01.2019 - Poster presentation at CUSO Winter School 2019 on Deep Learning.

29.08.2018 - Demo of a head, gaze, and visual attention tracker for Idiap's Innovation Days 2018 (video from the "20 Minutes" newspaper).

16.11.2017 - Talk at ICMI'17 conference to present our paper "Towards the Use of Social Interaction Conventions As Prior for Gaze Model Adaptation".

Publications

Deep learning-based tools leveraging production data to improve manufacturability

R. Siegfried, M. Villamizar, S. Devènes, R. Rey, A. Bacha, B. Morere, S. Zahno, J.-M. Odobez

(under review)

Robust Unsupervised Gaze Calibration Using Conversation and Manipulation Attention Priors

R. Siegfried and J.-M. Odobez

ACM Transactions on Multimedia Computing, Communications, and Applications, Volume 18, Issue 1, January 2022

Modeling and Inferring Attention between Humans or for Human-Robot Interactions

R. Siegfried

EPFL Thesis, 2021

Visual Focus of Attention Estimation in 3D Scene with an Arbitrary Number of Targets

R. Siegfried and J.-M. Odobez

IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPR-GAZE2021), Virtual, June 2021

ManiGaze: a Dataset for Evaluating Remote Gaze Estimator in Object Manipulation Situations

R. Siegfried, B. Aminian, and J.-M. Odobez

ACM Symposium on Eye Tracking Research and Applications (ETRA), Stuttgart, June 2020

MuMMER: Socially Intelligent Human-Robot Interaction in Public Spaces

M. E. Foster, O. Lemon, J.-M. Odobez, R. Alami, A. Mazel, M. Niemela, et al.

AAAI Fall Symposium on Artificial Intelligence for Human-Robot Interaction (AI-HRI), Arlington, November 2019

A Deep Learning Approach for Robust Head Pose Independent Eye Movements Recognition from Videos

R. Siegfried, Y. Yu and J.-M. Odobez

ACM Symposium on Eye Tracking Research & Applications (ETRA), Denver, June 2019

Facing Employers and Customers: What Do Gaze and Expressions Tell About Soft Skills?

S. Muralidhar, R. Siegfried, J.-M. Odobez and D. Gatica-Perez

International Conference on Mobile and Ubiquitous Multimedia (MUM), Cairo, November 2018

Towards the Use of Social Interaction Conventions As Prior for Gaze Model Adaptation

R. Siegfried, Y. Yu and J.-M. Odobez

ACM International Conference on Multimodal Interaction (ICMI), Glasgow, November 2017

Supervised Gaze Bias Correction for Gaze Coding in Interactions

R. Siegfried and J.-M. Odobez

Communication by Gaze Interaction (COGAIN) Symposium, Wuppertal, August 2017

Improved mobile robot programming performance through real-time program assessment

R. Siegfried, S. Klinger, M. Gross, R. W. Sumner, F. Mondada and S. Magnenat

ACM Conference on Innovation and Technology in Computer Science Education (ITiCSE), Bologna, July 2017

Resources

Code and data: Unsupervised gaze estimation calibration in conversation and manipulation settings (GitHub)

In our 2021 journal paper, we introduced a method for the unsupervised calibration of a gaze estimator using contextual priors based on top-down attention (i.e. attention related to the current task).

The data used in our experiments is available here: Idiap page, Zenodo

ManiGaze dataset (Idiap page)

The ManiGaze dataset was created to evaluate gaze estimation from remote RGB and RGB-D (standard vision and depth) sensors in Human-Robot Interaction (HRI) settings, and more specifically during object manipulation tasks. The recording methodology was designed to let the user behave freely and encourage a natural interaction with the robot, as well as to automatically collect gaze targets, since a posteriori annotation is almost impossible for gaze.

VFOA module (GitHub)

A Python package for basic visual focus of attention estimation of people in a 3D scene (geometrical and statistical models).